AlphaEvolve, Multi-Agent Systems, and AI in Science: Research Highlights 2025

Intro: Why 2025 Matters for AI Research

2025 has been a year of significant advances in artificial intelligence. Beyond building larger models, research is digging deeper into how AI can collaborate with humans, how systems can optimize themselves, and even how AI can change the way science is done.

At the same time, empirical studies are beginning to show that AI tools do not always make us as productive as we think. In this post, we discuss four influential 2025 papers that illustrate both the promise and the pitfalls of contemporary AI.

AlphaEvolve: Can AI Invent New Algorithms?

Google DeepMind's AlphaEvolve is a bold step toward AI-assisted discovery. Instead of humans painstakingly designing new algorithms, AlphaEvolve combines large language models with evolutionary search to automatically generate, test, and optimize them.
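The generate, test, and optimize loop can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not DeepMind's implementation: the "program" being evolved is just a job ordering for a hypothetical single-machine scheduling problem, `evaluate` plays the role of an automated evaluator, and `propose_edit` replaces the language model with a random swap. The job data and all function names are made up for illustration.

```python
import random
from typing import List, Tuple

# Hypothetical toy problem: order jobs on a single machine to minimise
# total weighted completion time. Each job is (processing_time, weight).
JOBS: List[Tuple[int, int]] = [(3, 1), (1, 4), (4, 2), (2, 5), (5, 3), (2, 2)]


def evaluate(order: List[int]) -> int:
    """Automated evaluator: total weighted completion time (lower is better)."""
    elapsed, total = 0, 0
    for job in order:
        processing_time, weight = JOBS[job]
        elapsed += processing_time
        total += weight * elapsed
    return total


def propose_edit(order: List[int], rng: random.Random) -> List[int]:
    """Stand-in for the LLM (assumption). AlphaEvolve asks a language model
    to rewrite candidate code; here we simply swap two random jobs."""
    child = order[:]
    i, j = rng.sample(range(len(child)), 2)
    child[i], child[j] = child[j], child[i]
    return child


def evolve(generations: int = 200, pop_size: int = 8, seed: int = 0) -> List[int]:
    """Generate -> test -> select loop with a fixed-size population."""
    rng = random.Random(seed)
    baseline = list(range(len(JOBS)))  # plays the "human-written" starting program
    population = [baseline] + [rng.sample(baseline, len(baseline)) for _ in range(pop_size - 1)]
    for _ in range(generations):
        # Select a promising parent by tournament among three candidates.
        parent = min(rng.sample(population, 3), key=evaluate)
        # Generate a variant and add it to the pool.
        population.append(propose_edit(parent, rng))
        # Test every candidate and keep only the best pop_size (elitism).
        population.sort(key=evaluate)
        population = population[:pop_size]
    return population[0]


if __name__ == "__main__":
    best = evolve()
    print("best order:", best, "cost:", evaluate(best))
```

Because the population is sorted and truncated every generation, the best score never gets worse than the starting ordering. For this particular toy problem an exact answer is known (order jobs by processing time divided by weight, Smith's rule), which makes it easy to check how close the search gets; real discovery tasks rarely come with such a reference, which is exactly the verification problem discussed next.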

Why it is exciting: It not only rediscovers known algorithms (evidence that the method works) but also proposes new ones, some of which outperform human-designed solutions on tasks such as scheduling and matrix multiplication.

Why it is worrying: The results are hard to verify. Algorithms discovered this way must be rigorously tested before they can be trusted, and the discovery process is still constrained by what humans specify.

💡 Implication: This could mark the beginning of AI as a genuine partner in computer science rather than just a tool. Trust and reproducibility, however, will be key challenges.

Compound AI Systems Optimization Survey

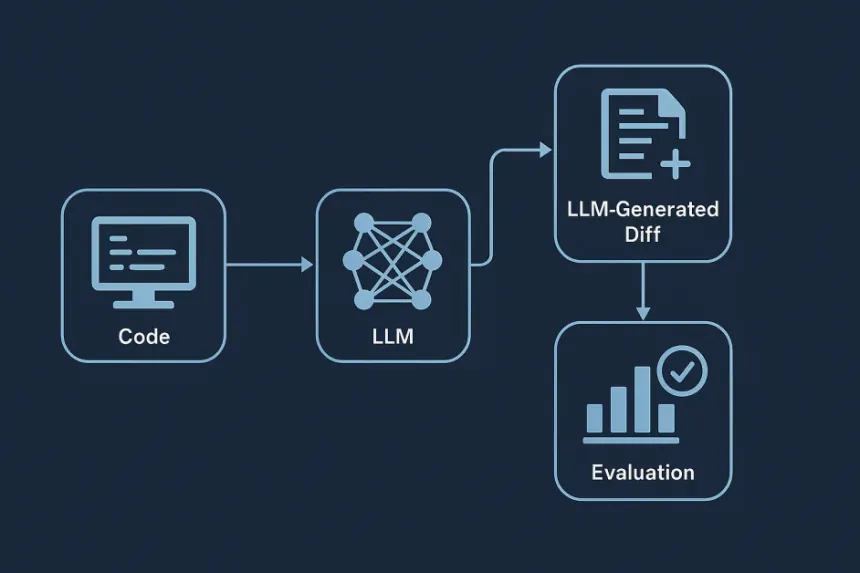

The most exciting AI applications today are no longer single models but compound systems: an LLM combined with retrieval modules, reasoning chains, external tools, and even other agents. Optimizing such systems, however, is significantly harder than optimizing a single model.

This 2025 survey categorizes techniques for optimizing compound systems, including fine-tuning, reinforcement learning, natural-language feedback, and black-box optimization.

The difficulty: Small inefficiencies compound when many components interact. An open problem is how to evaluate such systems holistically rather than only component by component.
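To make black-box optimization and holistic evaluation concrete, here is a minimal sketch that treats a whole pipeline as a black box: it tries every joint configuration and scores each one only on end-to-end answers. Everything here is hypothetical: the two-stage pipeline (a query normaliser feeding a dictionary-lookup "tool"), the knobs `lowercase` and `strip_punct`, and the three-question dev set. Exhaustive grid search stands in for the more sophisticated optimizers the survey covers.

```python
import itertools
from typing import Dict, List, Optional, Tuple

Config = Dict[str, bool]

# Hypothetical knowledge base used by the "tool" stage.
KB = {"paris": "france", "rome": "italy", "berlin": "germany"}

# Hypothetical dev set of (query, gold answer) pairs.
DEV_SET: List[Tuple[str, str]] = [
    ("Which country has Paris?", "france"),
    ("which country has rome", "italy"),
    ("Capital Berlin.", "germany"),
]


def run_pipeline(cfg: Config, query: str) -> str:
    """Toy two-stage compound system: query normaliser -> lookup tool."""
    # Stage 1: normaliser with two tunable knobs.
    q = query.lower() if cfg["lowercase"] else query
    if cfg["strip_punct"]:
        q = q.strip("?!. ")
    # Stage 2: look up the last word of the normalised query.
    return KB.get(q.split()[-1], "unknown")


def end_to_end_accuracy(cfg: Config, dev_set: List[Tuple[str, str]]) -> float:
    """Holistic metric: only the final answer counts, not per-stage scores."""
    return sum(run_pipeline(cfg, q) == gold for q, gold in dev_set) / len(dev_set)


def grid_search(
    space: Dict[str, List[bool]], dev_set: List[Tuple[str, str]]
) -> Tuple[Optional[Config], float]:
    """Black-box optimiser: evaluate every joint configuration end to end."""
    keys = list(space)
    best_cfg, best_score = None, float("-inf")
    for values in itertools.product(*(space[k] for k in keys)):
        cfg = dict(zip(keys, values))
        score = end_to_end_accuracy(cfg, dev_set)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


if __name__ == "__main__":
    space = {"lowercase": [False, True], "strip_punct": [False, True]}
    print(*grid_search(space, DEV_SET))  # → {'lowercase': True, 'strip_punct': True} 1.0
```

In this toy, flipping either knob on its own leaves accuracy at one in three; only the combination reaches 100 per cent. A tuning pass that changes one setting at a time from the default would see no gain, which is the kind of interaction holistic evaluation is meant to catch. Exhaustive search grows exponentially with the number of knobs, so practical systems need smarter search strategies.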

The opportunity: As multi-agent frameworks proliferate, this survey could become the playbook for turning them into scalable, reliable systems.

💡 Implication: If LLMs are the brains, compound-system optimization teaches us how to build the nervous system around them.

The Productivity Paradox: AI in Open-Source Development

A 2025 field experiment tested whether AI coding assistants increase the productivity of experienced open-source developers. Surprisingly, the result was a 19 per cent slowdown: developers took longer to complete their tasks when using AI.

Why? Developers spent more time prompting the assistant and verifying and debugging its output than they saved on writing code.
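A quick worked example shows the size of the effect. Assume, purely for illustration, a task that would take 60 minutes without AI (this baseline is hypothetical, not a figure from the study). A 19 per cent slowdown then means:

$$
T_{\text{with AI}} = (1 + 0.19)\,T_{\text{without AI}} = 1.19 \times 60\ \text{min} \approx 71.4\ \text{min}
$$

That is roughly 11 extra minutes for every hour of work, rather than the time savings developers might expect.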

Lesson learned: AI can be helpful, particularly when it comes to boilerplate or search, but it is not a silver bullet.

💡 Implication: This paper grounds the hype. For AI to genuinely boost productivity, tools need to reduce verification overhead, not add to it.

AI Transforming Science: A Survey

This comprehensive survey examines how LLMs and multimodal models are being integrated throughout the scientific pipeline: literature review, hypothesis generation, experiment design, and even peer review.

Opportunities: Rapid hypothesis testing, automatic figure generation, cross-disciplinary knowledge bridging.

Potential risks: Fabricated science, a deepening reproducibility crisis, and ethical questions about giving AI too much credit.

💡 Implication: AI is no longer just a research topic; it is becoming a research companion. The challenge is ensuring that rigor and ethics keep pace.

Conclusion: Promise & Pitfalls

Together, these four works reveal the dual reality of AI in 2025:

- AI can be creative, as systems such as AlphaEvolve demonstrate.

- Surveys of compound-system optimization remind us that building trustworthy AI ecosystems is as difficult as building the models themselves.

- Research on developer productivity warns us against uncritical adoption.

- And in science, AI is already transformative, but dangerous when left unregulated.

👉 Final takeaway: The big picture of 2025 is not just building larger models but figuring out how AI fits into complex systems, workflows, and human lives. That is where the real breakthroughs, and the hardest challenges, will be found.

📬 Want to connect or collaborate? Head over to the Contact page or find me on GitHub or LinkedIn.